Introduction:

In today’s data-driven era, the exponential growth of data has transformed the way organizations operate and make decisions. The sheer volume, velocity, and variety of the data being generated require sophisticated tools to extract meaningful insights. Consequently, big data tools have become the backbone of this transformation, enabling businesses to harness data for strategic decision-making, innovation, and competitive advantage.

These tools play a pivotal role in processing and analyzing datasets that are too large, or arrive too quickly, for traditional databases and analytics tools to manage. Their value lies in uncovering patterns, trends, and correlations within massive datasets, producing insights that drive informed decisions. This not only improves operational efficiency but also helps organizations stay ahead in the fast-evolving field of data analytics.

Navigating the Big Data Ecosystem: An Overview

As organizations embrace digital transformation, they encounter many types of data from many sources, including social media, sensors, and IoT devices. Big data tools are essential for ingesting, processing, and extracting actionable intelligence from this diverse and often unstructured data. They also empower data scientists, analysts, and decision-makers to draw meaningful conclusions, optimize processes, and identify opportunities for growth.

The need for effective big data solutions is underscored by the demands of real-time analytics, predictive modeling, and the imperative to stay agile in an ever-changing business landscape. Whether it’s streamlining operations, improving customer experiences, or gaining a deeper understanding of market trends, big data tools provide the technological foundation for organizations to turn raw data into strategic assets.

In this blog, we’ll explore big data capabilities through a comparative analysis of three prominent Azure services: Azure Synapse Analytics, Azure Databricks, and Azure Data Factory. We’ll examine their features and capabilities, and how each one addresses the evolving challenges of managing and extracting value from massive datasets in today’s dynamic business environment.

Azure Synapse: The Unified Analytics and Data Integration Hub

Azure Synapse Analytics brings enterprise data warehousing and big data analytics together in a single service. You can query data on your own terms, using either serverless (on-demand) or dedicated (provisioned) resources, at scale. This combination is designed to provide a unified experience across data ingestion, preparation, management, and serving.

For organizations with immediate business intelligence and machine learning needs, Azure Synapse Analytics is a robust choice. By bridging data warehousing and big data analytics, it offers a versatile ecosystem for analytics. Whether you need ad-hoc querying, data preparation, or advanced analytics, Synapse has you covered.

Azure Databricks: Empowering Data Science and Machine Learning

Azure Databricks is a fully managed, Apache Spark-based analytics platform for data engineering, data science, and machine learning. Spark is a distributed computing engine that splits large datasets into partitions and processes them in parallel across a cluster, which is what lets Databricks handle massive amounts of data efficiently.

This makes Azure Databricks a good choice for big data processing, complex data analysis, machine learning, and interactive data exploration. Its shared notebooks let data scientists and engineers collaborate on the same code and data to derive valuable insights from complex datasets.
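To make Spark's partition-and-combine idea concrete, here is a minimal local sketch in plain Python. It is an analogy only, not the Spark or Azure Databricks API: the function names (`partition`, `map_partition`, `word_count`) are hypothetical, threads stand in for the executors on a cluster's worker nodes, and a word count stands in for a real workload.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(records, num_partitions):
    """Split records into num_partitions chunks (round-robin), like Spark partitions."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def map_partition(lines):
    """'Map' step: count words independently within one partition."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def word_count(records, num_partitions=4):
    """Process partitions in parallel, then merge ('reduce') the partial results."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    chunks = partition(records, num_partitions)
    # Threads stand in for the executors a real Spark cluster would use.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(map_partition, chunks))
    # 'Reduce' step: combine the per-partition counts into one result.
    return reduce(lambda a, b: a + b, partials, Counter())


if __name__ == "__main__":
    lines = ["Spark processes data", "data at scale", "Spark at scale"]
    print(word_count(lines, num_partitions=2))
```

Because each partition is counted independently, the work can scale out by adding workers; only the small per-partition results have to be merged at the end.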

Azure Data Factory: Orchestrating Data Pipelines at Scale

In the realm of big data, orchestrating seamless data workflows is not just a necessity; it’s a strategic imperative. Azure Data Factory, Azure’s cloud-based data integration service, is a robust and versatile solution for orchestrating data pipelines reliably at scale.

Azure Data Factory serves as the conductor, orchestrating the movement and transformation of data across diverse sources and destinations. Its ability to integrate seamlessly with a multitude of data stores, both on-premises and in the cloud, provides the flexibility needed for modern data architectures.
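In Data Factory, a pipeline groups activities (for example, a copy step followed by a transformation), and activities can be chained so that each one runs only after the activities it depends on have succeeded. The sketch below models that dependency ordering locally with Python's standard `graphlib` module (Python 3.9+). It is a conceptual illustration, not the Data Factory API or its pipeline definition format; the `run_pipeline` helper and the activity names are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(activities, dependencies):
    """Run activities in dependency order and return the order they ran in.

    activities:   dict mapping an activity name to a zero-argument callable
    dependencies: dict mapping an activity name to the set of activity names
                  that must succeed before it may run
    An exception raised by any activity stops the whole pipeline at that point.
    A dependency cycle raises graphlib.CycleError before any activity starts.
    """
    graph = {name: set(dependencies.get(name, ())) for name in activities}
    unknown = set().union(*graph.values()) - set(activities)
    if unknown:
        raise ValueError(f"unknown dependencies: {sorted(unknown)}")
    order = []
    for name in TopologicalSorter(graph).static_order():
        activities[name]()  # raises on failure, halting later activities
        order.append(name)
    return order


if __name__ == "__main__":
    log = []
    activities = {
        "copy_raw": lambda: log.append("copied raw files"),
        "transform": lambda: log.append("transformed data"),
        "load_warehouse": lambda: log.append("loaded warehouse"),
    }
    dependencies = {"transform": {"copy_raw"}, "load_warehouse": {"transform"}}
    print(run_pipeline(activities, dependencies))
    # prints ['copy_raw', 'transform', 'load_warehouse']
```

A topological sort fits here because pipeline dependencies form a directed acyclic graph. The real service adds scheduling through triggers, retry policies, and monitoring, all of which this sketch leaves out.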

Face-off: Azure Synapse vs. Azure Databricks vs. Data Factory

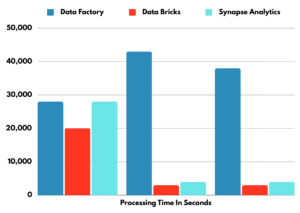

In the ever-evolving landscape of big data tools, choosing between Azure Synapse, Azure Databricks, and Data Factory is a critical decision for organizations navigating a data-driven future. Let’s dissect the strengths, use cases, and unique features of each contender in this heavyweight bout. To compare them, I ran the same use case on each of the three platforms. The results differed in both performance and cost, as the graphs below show.

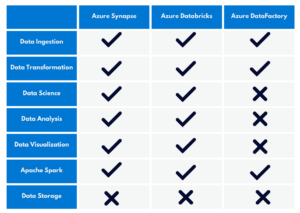

Detailed Comparison:

Spark Cluster:

Performance Measurement:

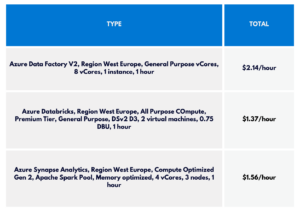

Cost Comparison:

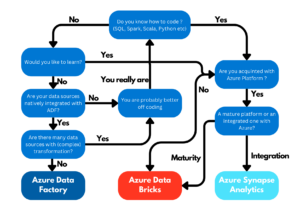

Decision Time: Choosing the Right Tool for the Job

- If unified analytics and scalability are key: Azure Synapse takes the lead.
- For data science and advanced analytics: Azure Databricks steals the spotlight.
- When orchestration and scalable pipelines matter: Azure Data Factory emerges as the champion.

Conclusion:

In the next posts in this series, we’ll delve deeper into each contender, exploring its features, use cases, and real-world applications. The big data landscape is vast, exciting, and constantly evolving. Embrace the journey, experiment fearlessly, and stay curious. Exploring these tools is more than a technology exercise; it’s a step toward turning data into a strategic asset that propels your organization to new heights of success. Happy exploring!